NASA technology enables precise landing and hazard avoidance without a pilot

The New Shepard (NS) booster lands after the vehicle's fifth flight during NS-11 on May 2, 2019. Credit: Blue Origin

Some of the most interesting places to study in our solar system are in the most extreme environments, but landing on any planetary body is already a risky proposition. With NASA planning robotic and crewed missions to new locations on the Moon and Mars, avoiding a landing on the steep slope of a crater or in a boulder field is critical to ensuring a safe touchdown for surface exploration of other worlds. To improve landing safety, NASA is developing and testing a suite of precision landing and hazard avoidance technologies.

A combination of laser sensors, a camera, a high-speed computer, and advanced algorithms will give spacecraft the artificial eyes and analytical ability to find a designated landing area, identify potential hazards, and adjust course to the safest touchdown site. The technologies developed under the Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE) project within the Space Technology Mission Directorate's Game Changing Development program will eventually allow spacecraft to avoid boulders, craters, and more within landing areas half the size of a football field that have already been targeted as relatively safe.

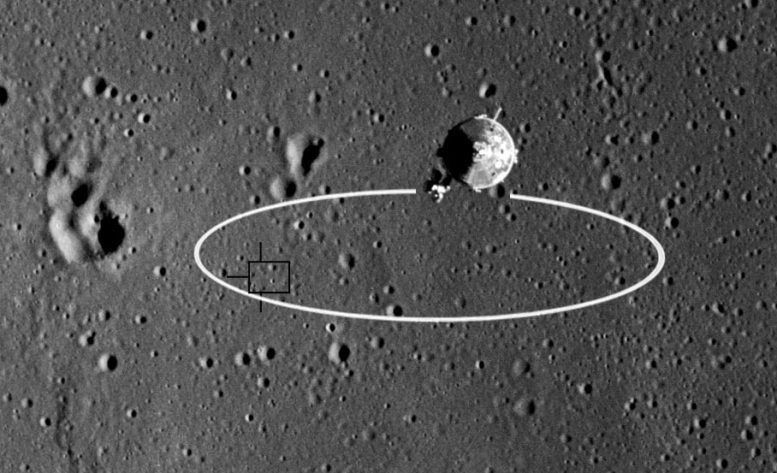

A new suite of lunar landing technologies, called Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE), will enable safer and more accurate landings on the surface of the Moon than ever before. Future Moon missions could use NASA's advanced SPLICE algorithms and sensors to target landing sites that were not possible during the Apollo missions, such as regions with hazardous boulders and nearby shadowed craters. SPLICE technologies could also help humans land on Mars. Credit: NASA

Three of SPLICE's four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket during an upcoming mission. As the rocket's booster returns to the ground after reaching the boundary between Earth's atmosphere and space, SPLICE's terrain relative navigation system, Doppler lidar navigation system, and descent and landing computer will run on board the booster. Each will operate in the same way it will when approaching the surface of the Moon.

SPLICE's fourth main component, a hazard detection lidar, will be tested in the future through ground and flight tests.

Following the breadcrumbs

When a site is chosen for exploration, part of the consideration is ensuring there is enough room for a spacecraft to land. The size of that area, called the landing ellipse, reveals the inexact nature of legacy landing technology. The targeted landing area for Apollo 11 in 1969 was approximately 11 miles by 3 miles, and astronauts piloted the lander. Subsequent robotic missions to Mars were designed for autonomous landings. Viking arrived at the Red Planet in 1976 with a target ellipse of 174 miles by 62 miles.

The ellipse of the Apollo 11 landing, shown here, was 11 miles by 3 miles. Precision landing technology will drastically reduce the landing area, allowing multiple missions to land in the same area. Credit: NASA

Technology has improved, and subsequent autonomous landing zones have decreased in size. In 2012, the Curiosity rover's landing ellipse was down to 12 miles by 4 miles.

The ability to pinpoint a landing site will help future missions target areas for new scientific exploration in locations previously considered too hazardous for an unpiloted landing. It will also enable advanced supply missions to deliver cargo and supplies to a single location, rather than spreading them out over miles.
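To put those figures side by side, the ellipse dimensions quoted above can be turned into rough areas. This is only a back-of-the-envelope sketch: it assumes the quoted numbers are the full lengths of each ellipse's major and minor axes, and the function and dictionary names are illustrative rather than anything NASA uses.

```python
import math

def ellipse_area_sq_miles(length_miles, width_miles):
    """Area of an ellipse from its full axis lengths (assumed meaning of the quoted figures)."""
    return math.pi * (length_miles / 2.0) * (width_miles / 2.0)

# Dimensions as quoted in the article, in miles (length, width).
LANDING_ELLIPSES = {
    "Apollo 11": (11, 3),
    "Viking": (174, 62),
    "Curiosity": (12, 4),
}

def area_ratio(mission_a, mission_b):
    """How many times larger mission_a's ellipse is than mission_b's."""
    area_a = ellipse_area_sq_miles(*LANDING_ELLIPSES[mission_a])
    area_b = ellipse_area_sq_miles(*LANDING_ELLIPSES[mission_b])
    return area_a / area_b

if __name__ == "__main__":
    for mission, (length, width) in LANDING_ELLIPSES.items():
        print(f"{mission}: about {ellipse_area_sq_miles(length, width):.0f} square miles")
    print(f"Viking vs. Curiosity: {area_ratio('Viking', 'Curiosity'):.2f}x")
```

Under that assumption, the Viking target area works out to a couple of hundred times the size of Curiosity's, which is the kind of shrinkage precision landing technology aims to continue.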

Every planetary body has its own unique conditions. That's why “SPLICE is designed to integrate with any spacecraft landing on a planet or moon,” said project manager Ron Sostaric. Based at NASA's Johnson Space Center in Houston, Sostaric explained that the project spans multiple centers across the agency.

Terrain relative navigation provides a navigation measurement by comparing real-time images to known maps of surface features during descent. Credit: NASA

“What we're building is a complete descent and landing system that will work for future Artemis missions to the Moon and can be adapted for Mars,” he said. “Our job is to put the individual components together and make sure they work as a functioning system.”

Atmospheric conditions may vary, but the process of descent and landing is the same. The SPLICE computer is programmed to activate terrain relative navigation several miles above the ground. The onboard camera photographs the surface, taking up to 10 pictures every second. Those images are continuously fed into the computer, which is preloaded with satellite images of the landing field and a database of known landmarks.

Algorithms search the real-time imagery for the known features to determine the spacecraft's location and navigate the craft safely to its expected landing point. It's similar to navigating by landmarks, such as buildings, rather than by street names.

In the same way, terrain relative navigation identifies where the spacecraft is and sends that information to the guidance and control computer, which is responsible for executing the flight path to the surface. The computer will know approximately when the spacecraft should be nearing its target, almost like laying breadcrumbs and then following them to the final destination.

This process continues until approximately four miles above the surface.
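The matching step described above can be illustrated with a toy example. The sketch below is not SPLICE's algorithm; it assumes a tiny brightness grid stands in for the preloaded satellite map and that a small camera patch is compared against every position on that map using a sum of squared differences. All names (`REFERENCE_MAP`, `locate_patch`) are hypothetical.

```python
# Toy stand-in for a preloaded satellite map: each number is a brightness value.
REFERENCE_MAP = [
    [1, 1, 2, 1, 1, 1],
    [1, 9, 8, 1, 2, 1],
    [1, 7, 9, 1, 1, 1],
    [1, 1, 1, 1, 5, 6],
    [2, 1, 1, 1, 6, 4],
]

def locate_patch(reference_map, camera_patch):
    """Return the (row, col) of the map position that best matches the camera patch.

    Every candidate position is scored by the sum of squared brightness
    differences; the lowest score is taken as the best match.
    """
    map_rows, map_cols = len(reference_map), len(reference_map[0])
    patch_rows, patch_cols = len(camera_patch), len(camera_patch[0])
    best_offset, best_score = None, float("inf")
    for row in range(map_rows - patch_rows + 1):
        for col in range(map_cols - patch_cols + 1):
            score = sum(
                (reference_map[row + i][col + j] - camera_patch[i][j]) ** 2
                for i in range(patch_rows)
                for j in range(patch_cols)
            )
            if score < best_score:
                best_offset, best_score = (row, col), score
    return best_offset

if __name__ == "__main__":
    # A slightly noisy view of the feature in the lower-right corner of the map.
    noisy_view = [[5, 6], [6, 5]]
    print(locate_patch(REFERENCE_MAP, noisy_view))
```

A flight system would have to cope with changes in scale, rotation, and lighting that this brute-force search ignores, but the principle is the same: a known feature found in the live image pins down where the camera is relative to the map.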

Laser navigation

Knowing the exact position of a spacecraft is essential for the calculations needed to plan and execute an automated descent to a precise landing. Midway through the descent, the computer turns on the Doppler lidar navigation system to measure velocity and range, which add to the precise navigation information coming from terrain relative navigation. Lidar (light detection and ranging) works in much the same way as radar but uses light waves instead of radio waves. Three laser beams, each as narrow as a pencil, are pointed toward the ground. The light from these beams bounces off the surface and reflects back toward the spacecraft.

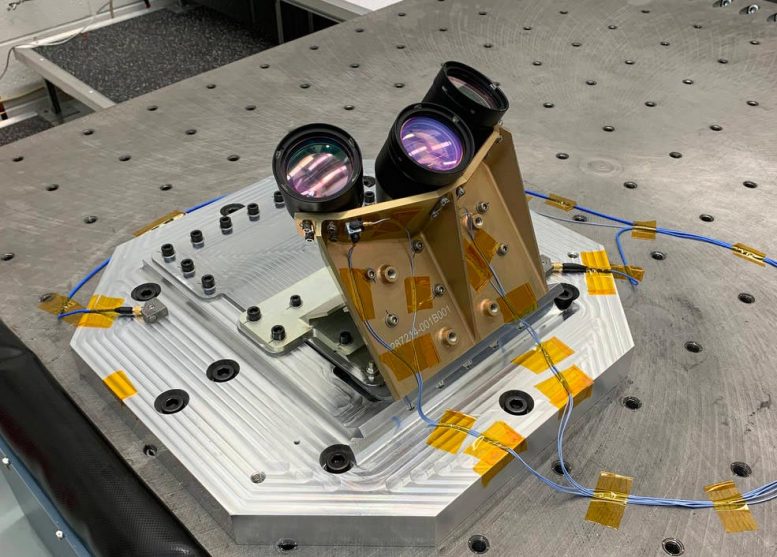

NASA’s Doppler Lidar Navigation Instrument consists of a chassis, which contains electronic and electro-optical components, and an optical head with three telescopes. Credit: NASA

The travel time and wavelength of that reflected light are used to calculate how far the spacecraft is from the ground, which direction it is heading, and how fast it is moving. These calculations are made 20 times per second for all three laser beams and fed into the guidance computer.

Doppler lidar works successfully on Earth. However, Farzin Amzajerdian, the technology's co-inventor and principal investigator at NASA's Langley Research Center in Hampton, Virginia, is responsible for addressing the challenges of using it in space.

“There are still some unknowns about how much signal will come back from the surface of the Moon and Mars,” he said. If the material on the ground is not very reflective, the signal returning to the sensors will be weaker. But Amzajerdian is confident that lidar will outperform radar technology because the laser frequency is much greater than that of radio waves, which allows greater precision and more efficient sensing.
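The physics behind those calculations can be sketched in a few lines. This is a simplified illustration, not the instrument's actual processing: it assumes the standard relations range = c·t/2 and line-of-sight speed = wavelength × Doppler shift / 2, a hypothetical beam layout (three beams tilted equally from straight down, 120 degrees apart), and a convention in which a positive shift means the surface is getting closer along that beam. All function names are illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def range_from_round_trip(round_trip_s):
    """Distance along one beam: the light travels to the surface and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def line_of_sight_speed(doppler_shift_hz, wavelength_m):
    """Speed along one beam from the frequency shift of the reflected light."""
    return wavelength_m * doppler_shift_hz / 2.0

def tilted_beam_directions(tilt_deg):
    """Hypothetical geometry: three unit vectors tilted from the down axis (z)."""
    tilt = math.radians(tilt_deg)
    return [
        [math.sin(tilt) * math.cos(math.radians(azimuth)),
         math.sin(tilt) * math.sin(math.radians(azimuth)),
         math.cos(tilt)]
        for azimuth in (0.0, 120.0, 240.0)
    ]

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def velocity_from_beams(beam_directions, los_speeds):
    """Recover the 3D velocity v from three beam speeds s_i = d_i . v (Cramer's rule)."""
    base = det3(beam_directions)
    if abs(base) < 1e-12:
        raise ValueError("beam directions must not lie in a single plane")
    velocity = []
    for axis in range(3):
        replaced = [row[:] for row in beam_directions]
        for i in range(3):
            replaced[i][axis] = los_speeds[i]
        velocity.append(det3(replaced) / base)
    return velocity

if __name__ == "__main__":
    beams = tilted_beam_directions(22.5)   # tilt angle is an assumption
    true_velocity = [3.0, -1.5, 40.0]      # m/s, made-up descent state
    speeds = [sum(d * v for d, v in zip(beam, true_velocity)) for beam in beams]
    print(velocity_from_beams(beams, speeds))
    print(range_from_round_trip(2e-5))     # metres for a 20-microsecond echo
```

Each beam on its own measures only the speed along its own direction; it takes all three, pointed in different directions, to reconstruct the full velocity vector, which is why the instrument carries three telescopes.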

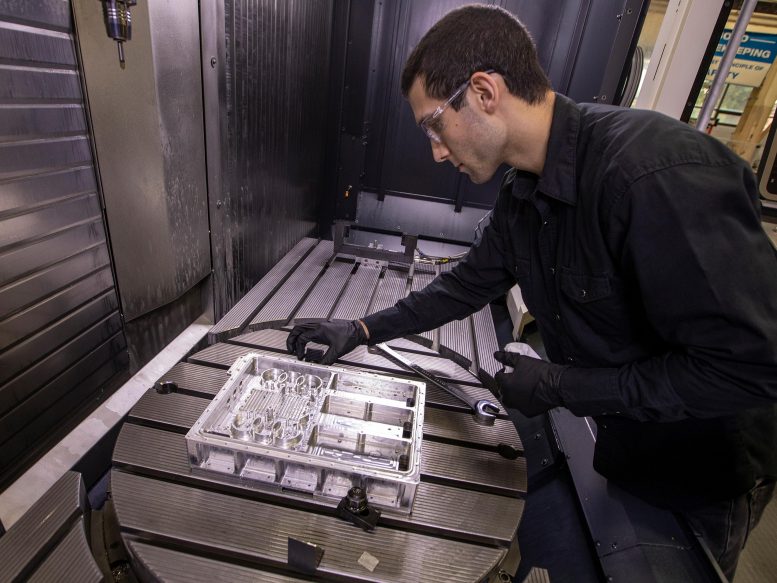

Langley engineer John Savage inspects a section of the Doppler Lidar navigational unit after it has been manufactured from a block of metal. Credit: NASA / David C. Bowman

The workhorse responsible for managing all of this data is the descent and landing computer. Navigation data from the sensor systems is fed into onboard algorithms, which calculate new pathways for a precise landing.

Computer power

The descent and landing computer synchronizes the functions and data management of the individual SPLICE components. It must also integrate seamlessly with the other systems on any spacecraft. This small computing powerhouse therefore keeps the precision landing technologies from overloading the primary flight computer.

The computational needs identified early on made it clear that existing computers were inadequate. NASA's high-performance spaceflight computing processor would meet the demand but is still several years from completion. An interim solution was needed to get SPLICE ready for its first suborbital rocket flight test with Blue Origin on its New Shepard rocket. Data from the new computer's performance will help shape its eventual replacement.

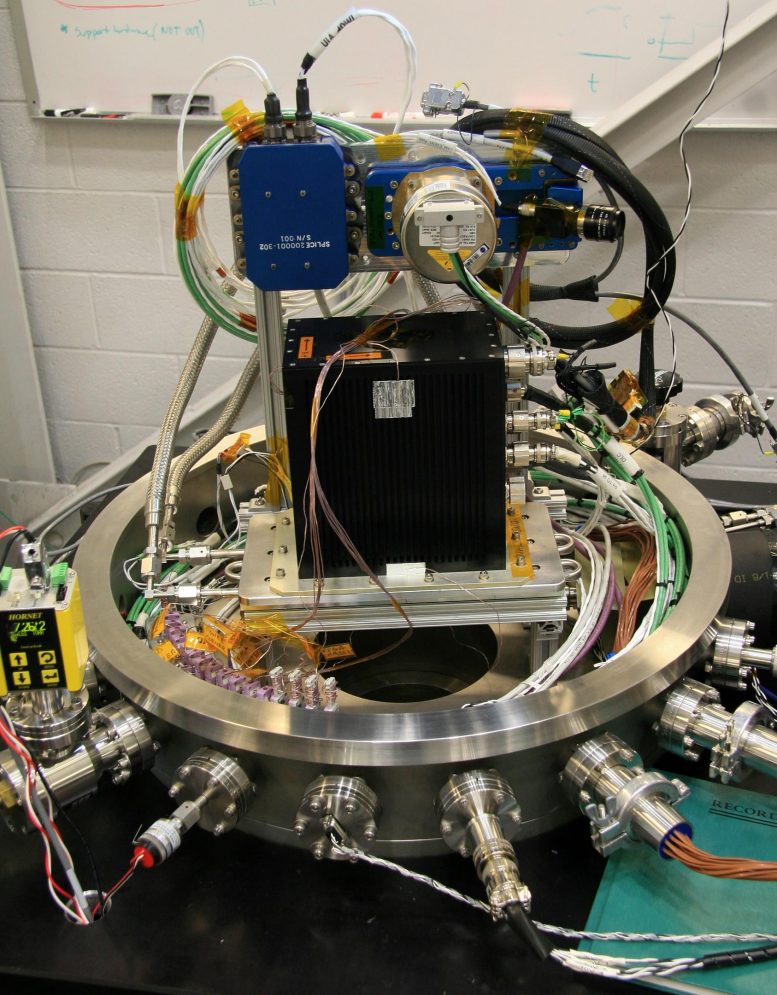

SPLICE hardware undergoes preparations for a vacuum chamber test. Three of SPLICE's four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket. Credit: NASA

“The surrogate computer has very similar processing technology, which is informing both the design of the future high-speed computer and future descent and landing computer integration efforts,” explained John Carson, the technical integration manager for precision landing.

Looking ahead, test missions like these will help shape safe landing systems for missions by NASA and commercial providers to the surface of the Moon and other solar system bodies.

“Landing safely and precisely on another world still has many challenges,” Carson said. “There's no commercial technology yet that you can go out and buy for this. Every future surface mission could use this precision landing capability, so NASA is meeting that need now. And we're fostering its transfer and use with our industry partners.”
